The Essence of Writing (with AI) is the Taking of Responsibility

As an educator, I can attest that generative AI has indeed changed everything. ChatGPT can write essays, write code, solve math problems, analyze cases and documents, analyze datasets, come up with creative ideas, and answer exam questions, all at a level more advanced than most undergraduate students. And all of our students have access to it for free.

This has resulted in a frenzy among university professors and higher education institutions. The primary concern that has attracted the most attention has been academic integrity, or in other words, the ability of students to cheat in assessments using generative AI. Many have tried to ban or restrict it, many have tried (and failed) to use "AI detectors" to enforce such restrictions, and many have changed their forms of assessment: online has moved to in-person, essays (which were typically take-home assignments) have been declared dead, and in-person paper-based exams are back in vogue.

My general view is that cheating should not be our primary concern, and that a focus on it misses the way the entire world is shifting under our feet. We should be thinking about what generative AI means for the trajectory of our species, and radically rethinking the role of higher education institutions. We need to be thinking about the new society being shaped by generative AI and how to best prepare ourselves and the next generation for it. This is why I launched the "Generative AI and Prompting" course at the Haskayne School of Business, University of Calgary.

However, I do agree that figuring out our systems of education, including assessment, is an important part of preparing our students for the future. So in this essay I'm going to tackle this high-urgency issue about "cheating with AI" head on, by sharing some thoughts that have helped me deal with it as a university professor, and that my colleagues have found useful. I don't have an answer for everything, but I will try to add something to the conversation.

AI-Generated Text is (Mostly) Not Plagiarism

To get the basics out of the way, we have to understand that text generated by Large Language Models (LLMs) is not plagiarism under most definitions of the word. Although LLMs are trained on a vast amount of text previously written by others, they do not reproduce that text verbatim in their output (except in rare cases). Instead, they take the patterns of writing they have learned in training and use them to produce original, novel text. That text is only in the form of meaningless electronic bits of data until someone reads it and ascribes meaning to it.

AI-generated text could be considered plagiarism under some definitions of the word. For example, in my own plagiarism workshops for graduate students I define plagiarism as "presenting work as original when it is not original relative to the expectation of originality in a given context." According to this definition, if the expectation of originality in a class is to not use AI, then using AI can be considered plagiarism.

Oxford University defines plagiarism as "presenting work or ideas from another source as your own," which can be interpreted as relaxing the requirement of human agency behind the original "source." However, I think there is a difference between a source that another human has previously given meaning to, and a source like original AI-produced content that no human has read before.

For example, consider the title of the book "You Look like a Thing and I Love You" by Janelle Shane. It actually sounds like quite a creative phrase, until you read two pages into the book and realize that this was part of a series of AI-generated pickup lines that were mostly so bad they were barely intelligible and almost all grammatically incoherent. But this one phrase turned out OK, at least grammatically. Janelle Shane decided to ascribe meaning to it, taking it out of its original context and interpreting it as meaningful and as written with intent. Is it plagiarism for her to use it as the title of her book? I think not, because she has put in the effort to take ownership and responsibility for this phrase as the title that she wants to give her book. In this example, the author provides proper acknowledgement by describing where this phrase comes from in the book, but even if she hadn't done that, I think presenting this phrase as her own work would not have been plagiarism.

The Link between Agency and Responsibility

AI does not have agency but it can fool you into thinking it does. Generative AI has an uncanny ability to exude the illusion of agency because it mimics human patterns of thinking. This is important because responsibility can only be attributed to agency. We cannot place the burden of responsibility on AI. This is why I don't believe in listing AI as a co-author, and why courts do not accept companies blaming the AI for making promises they didn't want to honor. One academic journal, for example, specifies that:

"Artificial Intelligence (AI) and AI-assisted technologies should not be listed as an author or co-author as authorship implies responsibilities and tasks that can only be attributed to and performed by humans."

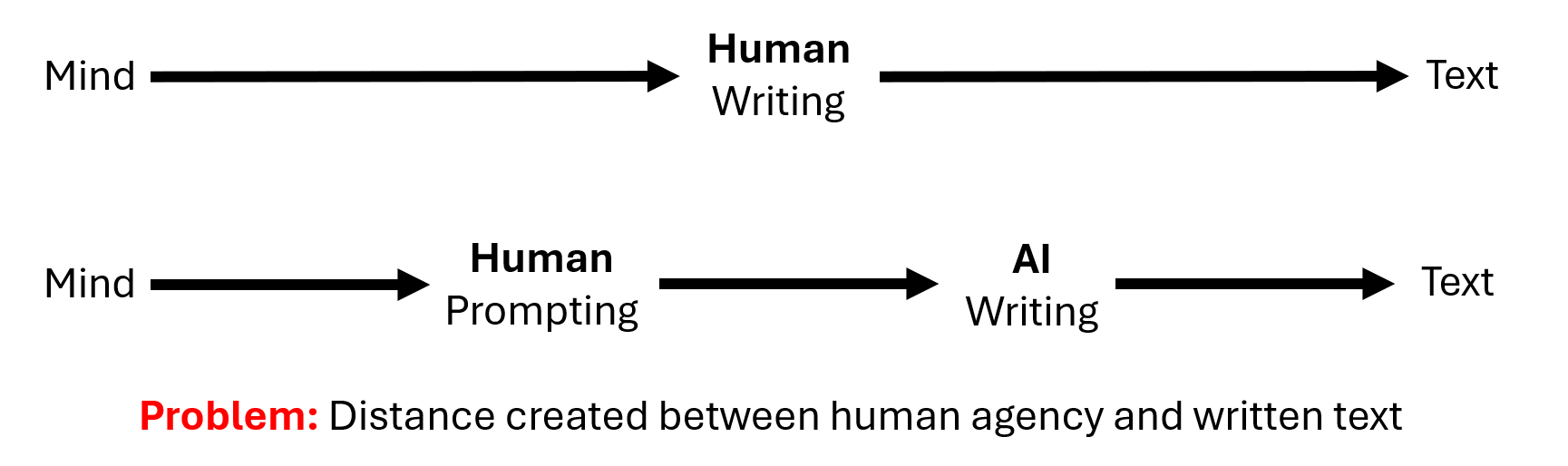

When we write something with AI, we create a new distance between our decisions and actions, and the written output. While in traditional writing we put the words together ourselves, in AI-assisted writing we give an input prompt and the AI puts together the words. This creates a distance between our agency and the written document, making us feel less personally responsible for it. We feel less agency and are thus inclined (mistakenly) to attribute that loss of agency to the AI's (non-existent) agency. This is dangerous because there is no other entity stepping in to take on the burden of responsibility that we are inclined to offload from ourselves. The solution, in my opinion, is to recognize that the responsibility is still fully ours, and to mitigate that new distance between agency and responsibility by actively and intentionally claiming back the responsibility.

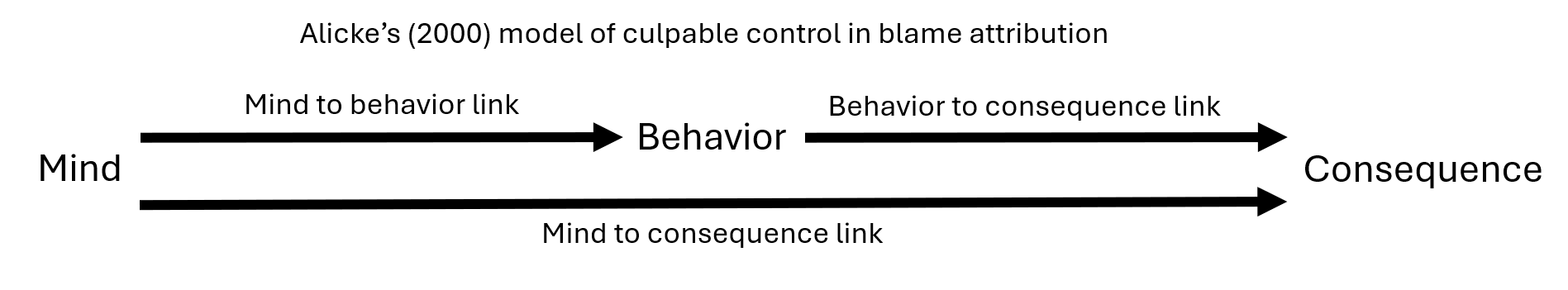

A Culpable Control Model of AI-Generated Writing

The framework of culpable control in blame attribution theory can help explain the situation. Anything we do in this world starts with an intention in our mind, which leads to a behavior or action we take, which then leads to a consequence or outcome. The more concrete the connections are between mind-to-behavior and behavior-to-consequence, the more we feel a personal sense of responsibility for the consequence. Conversely, the more we feel that the consequence is not really what we planned or acted toward, the less we feel like we had culpable control over the consequence.

When we use generative AI to produce writing that we previously had to write ourselves, we are increasing the distance between our actions and the outcome, hence creating a dissociation between our agency and our sense of responsibility for the outcome.

But I believe that it is possible, and necessary, to take steps to mitigate this dissociation and actively take back our sense of culpable control over the consequences of our use of AI. Below I outline what this could look like.

RRUYO: Read and Revise Until You Own

In discussions of plagiarism we say "copy-pasting" is wrong but "paraphrasing" is OK, because by paraphrasing we "put it into our own words." We take ownership of the words.

When dealing with AI-generated text, I think you don't necessarily need to change the wording, but you still need to actively take ownership of the words, and more often than not, this does involve making some changes to the wording as well. So while taking ownership of AI-generated text may involve simply reading and internalizing it and deciding that this is in fact what you want to say, in most cases it will require some revising to make it really feel like your words. This is what I call RRUYO: Read and Revise Until You Own.

Again, I emphasize that it is technically possible that just by reading, and without editing, you will internalize the text and feel like it is what you want to say. In that case, I think it is fine to copy-paste from AI-generated text. But the longer the text is, the more likely it is that you will not truly feel like you "own" it if you just copy-paste without editing, and the more likely it is that others will sense that the text was AI-generated. This is why I emphasize the second R in RRUYO.

The Careless Distribution of Slop Text is a Problem

I think it is fair to call text that is generated by AI but not read or internalized by a human "slop text". For the first time in history, generative AI gives people the ability to expose others to slop texts and slop thoughts that no one has taken responsibility for. For the first time in history, the reader of a text, and not the writer, may be the first person to "think" the text and ascribe meaning to it. This has implications we have to deal with.

I think in most cases it is unethical and even dangerous to send slop text to anyone, although I can imagine it having some valuable uses in automation if done responsibly. For the most part, carelessly sending AI-generated slop text to others without disclosing that it is AI-generated is problematic and damaging. For AI-generated text to be responsibly conveyed to others, it must be read and revised until it is internalized by the sender. I believe this is a valuable social norm that society will eventually decide to take more seriously. We are already seeing the potential cost of ignoring it.

The Social Problem of Content Authenticity Anxiety

We are all realizing that any text we see written after 2022 has the potential to be entirely or heavily AI-generated. As we are all becoming more proficient with generative AI tools, we are also better able to sense AI-generated writing with a high degree of confidence. But we are never fully sure. This has resulted in a social problem of constant paranoia and suspicion over the authenticity of text, and whether what we read or hear was actually intentionally produced and internalized by a human before it reached us. Stanford professor Victor Lee calls this "the looming authenticity crisis".

Every deliverable that a professor or a client or a boss or supervisor receives now is met with the thought "I wonder how much of this is AI-generated." Especially if there is something suspicious like the use of certain words, formatting, or style that are common in AI-generated outputs, it will naturally spark doubt and suspicion. I personally have already made judgements that others were sending me AI-generated output without taking responsibility for it, for example when a message started with "Hi Mohammad, my name is {my_name}." But I also recently experienced the other side of this coin when someone tried to discredit my contribution to a project by suggesting that it may have been AI-generated (it was not), even though I was taking full responsibility for it.

All this goes to show that there is now a very real social problem of content authenticity anxiety. It is an unhealthy thing for our society, but unfortunately a reality. This kind of problem leads to a generalized distrust in society that is known to be damaging. If we do not take the idea of internalizing AI-generated text seriously, and do not seriously enforce the social norm of RRUYO, we risk falling into an ever-deeper chasm of societal paranoia. I believe we must refrain from exposing others to AI-generated text we haven't internalized and taken ownership of, and we must expect the same of others. And this is what we must teach our students.

The Social Contract of Writing

When you send someone else writing that is presented as yours, you are signing a social contract that says "I hereby take responsibility for this writing." This concept of a social contract in authorship underscores the implicit agreement between the writer and the reader, the sender and the receiver of communication, that the content delivered is not only owned but also endorsed by the writer. This social contract plays an important role in the cohesion of our society, and we ought to protect it.

You cannot expect anyone to read your content or take it seriously if you do not take full responsibility for it. If multiple sentences seem like typical ChatGPT-style writing, it is very likely that the reader will lose interest in your writing. If you somehow manage to keep them engaged, that could be even worse because now you are engaging readers with a false promise.

I think two extremes are acceptable: either you copy and paste the entire text from AI and just declare that it is AI-generated text, or you RRUYO and take full responsibility for the text (in which case you do not need to mention AI at all). Anything in between is, in my opinion, a failure to take full responsibility for something that others are considering to be your writing.

An example of an unethical practice that I believe breaches the social contract is happening with applications of generative AI in marketing. Marketers are sending mass-customized cold emails without any human having first read the content and approved it for communication, and without disclosing in the email that the content is AI-generated. Communicating AI-generated text to others without first reading it yourself is akin to signing a contract you haven't read. A person who signs a contract is presumed to have read and understood it.

Students Must Shoulder the Consequences of Their Writing

Based on all the above, if you are an educator who wants to continue using writing assignments as a form of assessment, I have an important recommendation: Make writing matter! Make students truly feel the weight of their writing. Make their writing have consequences beyond just a grade, and make them truly bear the pain and suffer the consequences of poor writing.

Assessments involving writing should be adapted so that students feel the full weight of the responsibility that they bear for the content that they communicate. The traditional approach of simply getting a grade (possibly with brief feedback) just does not cut it in most cases. Being able to get a B- on an essay that took zero effort may be considered a "win" from the perspective of the cheating student, so giving a letter grade and moving on is not enough.

First, the standards for a B- should be much higher now that students are equipped with AI, but second, the ways in which students receive feedback or experience consequences for their writing should be more in-depth and consequential, priming them to truly shoulder the responsibility for every sentence of writing that they submit.

My recommendation for how to achieve this is to emphasize all the ways in which words matter in our society and simulate contexts in which the wording of a document can make enormous differences in society: contexts in which getting something wrong or not wording it properly can have grave consequences and real costs. Such realistic contexts are emphasized in the literature on "Authentic Assessment" as well, but my point is to deliberately choose authentic contexts that are high stakes.

In practice, you can surprise students with how seriously you take their text and how much you expect of it. Put the text to work in multiple ways, put it under peer review, put it under AI review, discuss it in front of others, simulate and role play social situations in class where the text makes a tangible and critical impact such as medical or legal settings. I personally like the idea of requiring that students publicly publish their work, for example as a blog post, because of the high stakes involved in putting their personal reputation on the line in front of a public audience, rather than just the teacher.

Conclusion

Your writing is what you take responsibility for. In fact, this argument can be generalized to any form of content creation. The point is that in the age of generative AI, generating content is not hard, and is also not meaningful, unless a human takes ownership and responsibility for it.

AI has made it clear that it is not the putting of words next to each other that is the essence of writing, but taking responsibility for what is ultimately communicated to others. AI does not need to be cited, or even acknowledged in my opinion, if you take full ownership and responsibility for the text produced after reading it and revising it to the point of internalization.

Citing AI as a co-author goes against the idea of taking responsibility. It stems from the same notion that AI has its own agency and the human can shift some burden of responsibility to it. AI-generated content must be declared as such only if you do not take full responsibility for it. Disclosing that you used AI to produce content is an admission that you do not take full responsibility for it, and is mostly unnecessary if you do take responsibility. But failing to disclose AI use for slop text that is sent to others is mostly unethical and will contribute to the rise of authenticity anxiety and generalized distrust in society.
